
Guidelines for Selecting Sensor Quantity and Optimal Placement in Area Temperature Mapping

Temperature mapping is a fundamental requirement in pharmaceutical manufacturing, biotech laboratories, and regulated storage environments. Whether validating a cold room, stability chamber, warehouse, or transport system, the accuracy of your mapping study depends heavily on two variables: how many temperature dataloggers you use and where and how you place them. A poorly designed study may appear compliant on paper while silently allowing temperature excursions that compromise product quality, regulatory standing, and patient safety.

In today’s compliance-driven landscape, regulators expect scientific justification rather than arbitrary sensor placement. Guidance from WHO and ISPE, together with standards such as EN 60068, increasingly emphasizes a risk-based approach supported by reliable validation equipment. This article explains how to decide the number and placement of temperature sensors using grid-based methods such as 9-point and 27-point layouts, risk-based strategies, and practical deployment logic. The objective is to help you design defensible, efficient, and audit-ready temperature validation processes using proven principles and modern wireless data logger technology.

Understanding Temperature Mapping Fundamentals

At its core, temperature mapping quantifies spatial and temporal temperature variations within a controlled environment, ranging from compact stability chambers to large-scale warehouses. The objective is not simply to record temperatures, but to prove, with data, that defined limits are consistently maintained under empty, loaded, and worst-case operating conditions. In regulated environments, this evidence forms a critical part of thermal validation and ongoing compliance.

A well-executed temperature mapping study supports multiple validation and quality objectives:

- Thermal validation of storage, processing, and distribution areas

- Qualification and performance verification of HVAC-controlled environments

- Demonstration of compliance with GMP, GDP, WHO, and ISO requirements

- Identification and mitigation of risks to protect temperature-sensitive products

Accurate insights depend on the capability of the temperature dataloggers used. Modern wireless and real-time temperature data loggers capture high-resolution data over time and across the mapped space without the constraints of hardwired systems. When deployed as part of a validated temperature validation system, these devices expose critical behaviours such as hot and cold spots, vertical stratification, recovery times after door openings, and the influence of airflow dynamics. These findings often reveal that apparent uniformity masks localized risks that static monitoring sensors fail to detect.

Traditional Grid Methods: Reliable Starting Points for Logger Deployment

Grid-based methods provide a structured and regulator-friendly foundation for temperature mapping studies. By placing sensors at defined points on a regular geometric grid, these approaches aim to ensure comprehensive spatial coverage and repeatability. They are particularly effective in smaller, well-defined environments where thermal risks are relatively uniform and operating conditions are predictable. Rooted in long-standing guidance such as WHO Technical Report Series 961 and reinforced by EN 60068, grid-based layouts remain a defensible starting point during initial qualification and requalification activities.

The 9-Point Method

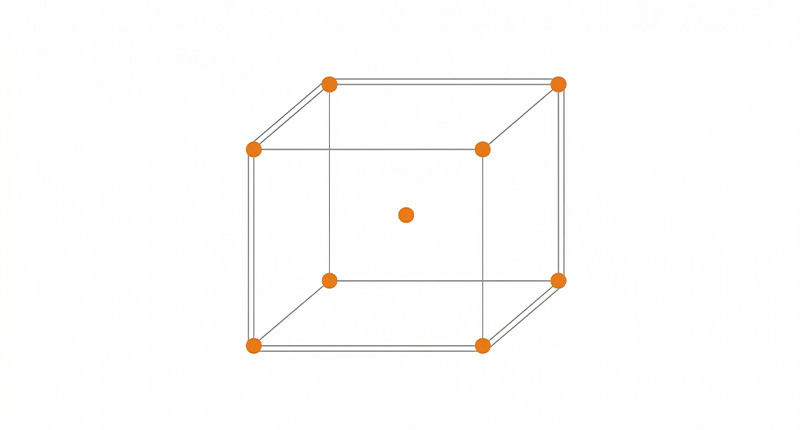

The 9-point method is widely applied to small chambers, incubators, refrigerators, and freezers where internal volumes are limited and airflow patterns are relatively stable. The approach is based on a two-dimensional 3×3 matrix that captures lateral temperature distribution.

Typical placement includes:

- Four corner positions to capture perimeter extremes

- Four mid-wall locations to assess boundary influence

- One central point representing average conditions

In practice, vertical arrangement must also be considered. If chamber height exceeds approximately 2 to 3 meters, or if forced airflow is inconsistent, the grid should be replicated at multiple vertical levels to capture buoyancy-driven gradients.

From a calculation standpoint, the usable internal volume should be clearly defined before deployment. Non-product zones, dead spaces, or permanently unused areas should be excluded. For example, in a 1.5 m × 1.5 m × 2 m stability chamber, nine temperature dataloggers are typically sufficient when distributed across floor, mid-height, and upper zones. At least one temperature sensor should be positioned near the control or reference probe to provide a meaningful performance comparison.
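The lateral 3×3 matrix described above can be expressed as coordinates. The sketch below is a minimal illustration, assuming a rectangular footprint measured from one chamber corner and a hypothetical wall clearance (`inset_m`, defaulting to 0.1 m) that is not taken from WHO or EN 60068 guidance. The helper names `nine_point_plane` and `replicate_levels` are illustrative only.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres from one chamber corner


def nine_point_plane(width_m: float, depth_m: float, z_m: float,
                     inset_m: float = 0.1) -> Dict[str, List[Point]]:
    """Return a 3x3 plan grid at height z_m, grouped into corner, mid-wall and center roles.

    inset_m is an assumed clearance from the walls, not a value from any guideline.
    """
    if not 0 <= inset_m < min(width_m, depth_m) / 2:
        raise ValueError("inset_m must be non-negative and smaller than half the footprint")
    xs = (inset_m, width_m / 2, width_m - inset_m)
    ys = (inset_m, depth_m / 2, depth_m - inset_m)
    roles = {2: "corner", 1: "mid-wall", 0: "center"}
    layout: Dict[str, List[Point]] = {role: [] for role in roles.values()}
    for ix, x in enumerate(xs):
        for iy, y in enumerate(ys):
            # Index 1 is the middle of an axis; count how many axes sit near a wall.
            near_walls = int(ix != 1) + int(iy != 1)
            layout[roles[near_walls]].append((round(x, 3), round(y, 3), z_m))
    return layout


def replicate_levels(width_m: float, depth_m: float, levels_m: Sequence[float],
                     inset_m: float = 0.1) -> List[Point]:
    """Repeat the 3x3 plan grid at each height in levels_m."""
    points: List[Point] = []
    for z in levels_m:
        for group in nine_point_plane(width_m, depth_m, z, inset_m).values():
            points.extend(group)
    return points


if __name__ == "__main__":
    plane = nine_point_plane(1.5, 1.5, z_m=1.0)
    for role, pts in plane.items():
        print(role, pts)
```

For taller chambers or inconsistent airflow, passing several heights to `replicate_levels` repeats the grid at each level; the position next to the control or reference probe would still be added separately.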

Key strengths of the 9-point method include:

- Clear alignment with WHO and EN 60068 expectations for small volumes

- Straightforward documentation and audit defensibility

- Rapid setup and analysis for initial qualification

However, grid-based symmetry alone does not account for site-specific airflow behaviour. Smoke studies or airflow visualization should be performed where possible to validate assumptions and refine placement near supply vents or return paths. For hygroscopic products, combining temperature sensors with humidity sensors further strengthens thermal validation outcomes.

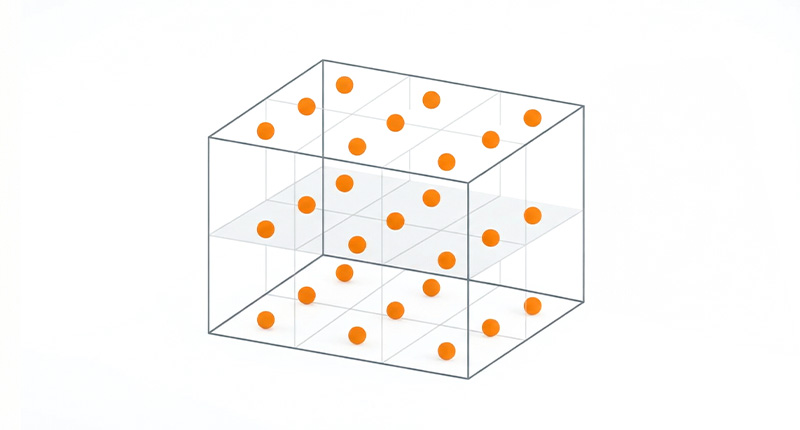

The 27-Point Method

As internal volume increases, horizontal-only coverage becomes insufficient. The 27-point method extends the grid into three dimensions using a 3×3×3 configuration, making it suitable for cold rooms and mid-sized storage areas.

This approach distributes sensors across:

- Lower levels representing product bases or floor storage

- Mid-levels aligned with standard shelving heights

- Upper zones where heat accumulation and stratification are most likely

Calculation is driven by room geometry. Length, width, and height are divided into three equal segments to determine sensor spacing. For instance, a 6 m × 6 m × 3 m room yields measurement points at roughly 2 m intervals horizontally and 1 m vertically, resulting in 27 primary positions. Additional data loggers are often justified near doors, loading areas, or other dynamic interfaces where temperature excursions are most likely.
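The spacing arithmetic in the 6 m × 6 m × 3 m example can be reproduced in a few lines. This sketch assumes each logger sits at the midpoint of its segment, which is one reasonable reading of "divided into three equal segments"; the function names are hypothetical, and door or loading-area positions would be appended to the resulting list afterwards.

```python
from itertools import product
from typing import List, Tuple


def segment_centers(length_m: float, segments: int = 3) -> List[float]:
    """Split one room dimension into equal segments and return each segment midpoint."""
    if length_m <= 0 or segments < 1:
        raise ValueError("length must be positive and segments at least 1")
    step = length_m / segments
    return [step * (i + 0.5) for i in range(segments)]


def grid_27(length_m: float, width_m: float, height_m: float) -> List[Tuple[float, float, float]]:
    """Return the 3x3x3 primary positions as (x, y, z) tuples in metres."""
    return list(product(segment_centers(length_m),
                        segment_centers(width_m),
                        segment_centers(height_m)))


if __name__ == "__main__":
    positions = grid_27(6.0, 6.0, 3.0)
    print(len(positions))         # 27 primary positions
    print(segment_centers(6.0))   # [1.0, 3.0, 5.0] -> 2 m horizontal spacing
    print(segment_centers(3.0))   # [0.5, 1.5, 2.5] -> 1 m vertical spacing
```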

The 27-point grid aligns well with USP <1079.4> principles for good storage practices by deliberately targeting extremes rather than averages. In real-world applications, this method frequently reveals temperature differentials of 1 to 2 °C under loaded conditions, prompting targeted HVAC adjustments or procedural controls.
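Differentials of this kind are usually summarized from the logged data. The sketch below assumes readings keyed by (x, y, z) position, as a 27-point grid would produce, and reports two simple metrics: the spread between the warmest and coolest time-averaged positions, and the difference between the top-level and bottom-level means as a rough stratification indicator. Both metric definitions and the sample readings are illustrative assumptions, not acceptance criteria from USP or WHO.

```python
from statistics import mean
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]  # (x, y, z) in metres


def spatial_differential(readings: Dict[Position, List[float]]) -> float:
    """Warmest minus coolest time-averaged position, in °C."""
    averages = [mean(values) for values in readings.values()]
    return max(averages) - min(averages)


def vertical_gradient(readings: Dict[Position, List[float]]) -> float:
    """Mean of all readings at the highest level minus mean at the lowest level."""
    by_level: Dict[float, List[float]] = {}
    for (_, _, z), values in readings.items():
        by_level.setdefault(z, []).extend(values)
    return mean(by_level[max(by_level)]) - mean(by_level[min(by_level)])


if __name__ == "__main__":
    sample = {  # hypothetical loaded-condition readings, °C
        (1.0, 1.0, 0.5): [4.0, 4.5],
        (3.0, 3.0, 0.5): [4.5, 5.0],
        (1.0, 1.0, 2.5): [5.0, 5.5],
        (3.0, 3.0, 2.5): [5.5, 6.0],
    }
    print(spatial_differential(sample))  # 1.5
    print(vertical_gradient(sample))     # 1.0
```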

While scalable and robust, the limitation of the 27-point method lies in potential redundancy within thermally stable zones. As facilities grow in size or complexity, transitioning from pure grid-based layouts to risk-based placement strategies becomes essential to maintain efficiency without sacrificing compliance.

Steps to Define Data Logger Placement for Your Specific Environment

Defining data logger placement should follow a structured, evidence-driven sequence that supports both temperature mapping and broader temperature validation activities. Regulators expect placement decisions to be traceable, logical, and directly linked to product exposure, process conditions, and intended use of the validated area.

- Define the usable and validated volume: clearly identify the area subject to temperature validation, excluding non-product or inactive zones so measurements reflect true exposure during normal and worst-case operation.

- Identify product storage, process heights, and load characteristics: align logger placement with how products, materials, or components are stored or processed, considering shelving levels, pallet heights, load density, and thermal mass.

- Review HVAC design and control strategy: position loggers to reflect airflow behaviour, control probe influence, supply and return paths, and any areas where temperature control is most challenged.

- Assess operational and process-related influences: account for door openings, loading and unloading cycles, equipment operation, personnel movement, and other routine activities that affect temperature stability during validation.

- Select a baseline placement model: apply an appropriate grid-based approach, such as a 9-point or 27-point layout, to establish consistent baseline coverage suitable for initial temperature qualification.

- Overlay risk-based and validation-driven adjustments: refine logger density and positioning based on identified risks, historical deviation data, pilot studies, and validation objectives to ensure worst-case conditions are adequately challenged.

This combined grid-based and risk-driven methodology supports both temperature mapping studies and full temperature validation by delivering placement strategies that are practical, scalable, and defensible during inspections.
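The first step of that sequence, defining the usable volume, is simple arithmetic once exclusions are agreed. This is a minimal sketch assuming the room and every excluded zone can be approximated as non-overlapping rectangular boxes; the class and function names and the example dimensions are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Box:
    """Rectangular zone approximated by its length, width and height in metres."""
    name: str
    length_m: float
    width_m: float
    height_m: float

    @property
    def volume_m3(self) -> float:
        return self.length_m * self.width_m * self.height_m


def usable_volume(room: Box, exclusions: Iterable[Box]) -> float:
    """Gross room volume minus excluded zones (assumed not to overlap each other)."""
    excluded = sum(zone.volume_m3 for zone in exclusions)
    if excluded > room.volume_m3:
        raise ValueError("excluded zones exceed the room volume")
    return room.volume_m3 - excluded


if __name__ == "__main__":
    room = Box("cold room", 6.0, 6.0, 3.0)
    exclusions = [
        Box("space above maximum stacking height", 6.0, 6.0, 0.5),
        Box("door access zone kept clear of product", 1.0, 1.5, 3.0),
    ]
    print(usable_volume(room, exclusions))  # 85.5
```

Keeping each exclusion as a named record also gives the written justification for why that space was left out of the mapped volume.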

Applying Risk-Based Logic to Optimize Data Logger Placement

While grid-based layouts provide structure, temperature validation requires a deeper focus on how and where control failures are most likely to occur. Risk-based placement shifts emphasis from geometric symmetry to failure probability, ensuring that temperature dataloggers are concentrated in zones most likely to challenge validated limits during routine and stressed operation. ISPE guidance formally supports this approach as part of modern, science-driven temperature validation.

Conducting a Structured Risk Assessment

A risk-based validation study begins with a detailed assessment of the environment and process rather than a predefined sensor layout. The objective is to understand how heat enters, distributes within, and exits the area under real operating conditions.

Key assessment activities include:

- Reviewing room layout, shelving, racking, doors, vents, drains, and heat-generating equipment

- Evaluating HVAC design, air change rates, control probe placement, and recovery performance

- Assessing operational behaviour such as loading frequency, door open duration, equipment cycles, and maintenance access

- Analysing historical deviations, excursions, and prior validation outcomes

Risk categorization should be explicit and documented. High-risk zones often include frequently accessed doors, loading interfaces, or areas near control limitations. Medium-risk zones may involve perimeter walls or external heat influence, while low-risk zones are typically insulated core areas with stable airflow.

Quantification is commonly performed using tools such as Failure Mode and Effects Analysis (FMEA), where likelihood, severity, and detectability are each scored and combined into a risk priority number used to rank zones. Areas exceeding predefined thresholds warrant increased data logger density. In large controlled spaces, a baseline density may be supplemented with additional sensors at each identified high-risk feature.
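In conventional FMEA, the risk priority number (RPN) is the product of the three scores, so ranking zones reduces to multiplying and sorting. In this sketch the 1 to 10 scales, the high/medium thresholds of 100 and 50, and the example zones are all assumptions chosen for illustration; a real study would take them from the site's documented risk-assessment procedure.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Zone:
    name: str
    likelihood: int     # 1 (rare) to 10 (frequent); assumed scale
    severity: int       # 1 (negligible) to 10 (product loss); assumed scale
    detectability: int  # 1 (easily detected) to 10 (hard to detect); assumed scale

    @property
    def rpn(self) -> int:
        """Risk priority number: likelihood x severity x detectability."""
        return self.likelihood * self.severity * self.detectability


def rank_zones(zones: List[Zone], high: int = 100, medium: int = 50) -> List[Tuple[str, int, str]]:
    """Sort zones by RPN (highest first) and assign an illustrative risk category."""
    ranked = []
    for zone in sorted(zones, key=lambda z: z.rpn, reverse=True):
        category = "high" if zone.rpn >= high else "medium" if zone.rpn >= medium else "low"
        ranked.append((zone.name, zone.rpn, category))
    return ranked


if __name__ == "__main__":
    zones = [
        Zone("insulated core racking", 2, 6, 3),
        Zone("loading door", 8, 7, 4),
        Zone("external perimeter wall", 4, 6, 4),
    ]
    for row in rank_zones(zones):
        print(row)
```

With these hypothetical scores the loading door (224) lands in the high category, the perimeter wall (96) in medium, and the core racking (36) in low, mirroring the zone types described above.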

Advanced validation programs may also employ airflow studies or computational fluid dynamics models to support placement decisions. When combined with temperature and pressure data, these analyses provide a more complete understanding of environmental behaviour during validation.

Placement Principles in Risk-Driven Temperature Mapping

Risk-based placement prioritizes product and process exposure over spatial symmetry. Temperature dataloggers should be positioned at representative heights corresponding to actual storage or process conditions:

- Lower levels near floor storage or pallets

- Mid-levels aligned with primary shelving or working heights

- Upper levels where warm air accumulation or stratification is most likely

In high-impact zones, clustered placement is often justified. Deploying multiple loggers at a single interface, such as inside the room, at the doorway, and just outside the threshold, captures transient excursions and recovery behaviour that single-point measurements may miss.
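For loggers clustered at a door, recovery behaviour is often summarized as the time from the first out-of-limit reading until the temperature returns within limits. The function below is a hypothetical helper that looks only at the first excursion, assuming timestamps in minutes and an acceptance band such as 2 to 8 °C. Its resolution cannot be finer than the logging interval.

```python
import math
from typing import List, Optional, Tuple

Sample = Tuple[float, float]  # (minutes since study start, temperature in °C)


def recovery_time(samples: List[Sample], low: float, high: float) -> Optional[float]:
    """Minutes from the first out-of-limit reading to the next in-limit reading.

    Returns None when no excursion occurs and math.inf when the series ends
    before the temperature has recovered.
    """
    excursion_start: Optional[float] = None
    for minute, temp in samples:
        within = low <= temp <= high
        if excursion_start is None and not within:
            excursion_start = minute
        elif excursion_start is not None and within:
            return minute - excursion_start
    return None if excursion_start is None else math.inf


if __name__ == "__main__":
    # Hypothetical 1-minute readings from the logger just inside a door
    doorway = [(0, 5.0), (1, 5.5), (2, 8.5), (3, 9.0), (4, 8.2), (5, 7.5), (6, 6.0)]
    print(recovery_time(doorway, low=2.0, high=8.0))  # 3
```

Running the same function on the inside, doorway, and outside loggers of a cluster makes it easy to compare how far a disturbance propagates and how quickly each position recovers.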

Logger density should be adjusted to room size, complexity, and validation risk. Smaller environments may require higher density in critical zones, while larger, stable areas may justify wider spacing. Validation typically includes pilot runs followed by data review and iterative refinement to confirm that worst-case conditions have been effectively challenged.

When applied at scale, this approach improves both efficiency and confidence. Facilities often reduce unnecessary sensor deployment while strengthening validation outcomes, reallocating resources toward ongoing monitoring and control. Wireless data loggers are particularly valuable in temperature validation, as they support rapid repositioning and adaptation as risk profiles evolve.

EN 60068 for Data Logger Placement: Precision in Environmental Testing

EN 60068, the European adoption of the IEC 60068 series on environmental testing, is a principle-based standard used during qualification of climatic and temperature-controlled chambers. It does not prescribe fixed sensor counts such as 9-point or 27-point layouts. Instead, it requires that temperature measurement locations adequately represent the usable test volume and capture worst-case conditions.

In practical application, industry commonly interprets EN 60068 through structured layouts supported by technical justification:

- Grid-based layouts are often used as a baseline and refined based on chamber size, airflow behaviour, and test severity

- Smaller chambers typically apply a minimum of nine points as an accepted industry norm for spatial coverage

- Larger volumes require proportionally more sensors, with increased focus on vertical temperature gradients

Accuracy requirements are defined by the specific test method and severity class, not by a single universal tolerance. Temperature sensors must therefore demonstrate appropriate accuracy and traceability, supported by calibration and documented measurement systems.

Placement under EN 60068 prioritizes:

- Identification of temperature extremes rather than averages

- Evaluation under worst-case operating conditions

- Assessment of both steady-state stability and transient behaviour

In regulated environments, EN 60068 is applied alongside WHO, ISPE, and GMP guidance. Consistent alignment between these frameworks, supported by clear justification and documentation, is essential for inspection readiness.

Practical Toolkit: Step-by-Step Calculation and Deployment

This section translates methodology into execution, focusing on repeatability, traceability, and audit defensibility. The objective is to move from conceptual placement logic to a documented, regulator-ready deployment.

Calculating the Number of Data Loggers

There is no single formula accepted across all environments, but calculation should follow a transparent logic that combines geometry with risk.

Baseline approaches typically include:

- Small chambers and equipment: 9 to 15 temperature dataloggers using structured grid layouts

- Cold rooms and controlled areas: 15 to 30 temperature data loggers depending on usable volume and airflow complexity

- Warehouses and large storage zones: approximately one temperature logger per 25 to 50 square meters, refined by risk factors

Grid-based estimates may be supplemented by risk adjustments, increasing logger density near doors, HVAC interfaces, or historically unstable zones. Tools such as spreadsheets or validated mapping software are commonly used to simulate counts and document rationale.
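For large storage zones, the area-based rule of thumb and the risk adjustments can be combined in one transparent calculation. This is a sketch under stated assumptions: the area density is treated as plan positions, each repeated at a chosen number of vertical levels (the text does not specify whether its 25 to 50 m² figure already includes vertical levels), and a fixed number of extra loggers is added per high-risk feature. The function name, parameters, and example inputs are hypothetical.

```python
import math


def estimate_logger_count(floor_area_m2: float, m2_per_logger: float, levels: int = 1,
                          high_risk_features: int = 0, extra_per_feature: int = 2) -> int:
    """Baseline area-based count, repeated per vertical level, plus risk-driven additions."""
    if floor_area_m2 <= 0 or m2_per_logger <= 0 or levels < 1:
        raise ValueError("area, density and levels must be positive")
    plan_positions = math.ceil(floor_area_m2 / m2_per_logger)  # round up, never down
    return plan_positions * levels + high_risk_features * extra_per_feature


if __name__ == "__main__":
    # Hypothetical 1,200 m2 warehouse, one plan position per 40 m2,
    # three vertical levels, four dock doors with two extra loggers each.
    print(estimate_logger_count(1200, 40, levels=3, high_risk_features=4))  # 98
```

Rounding up keeps the achieved density at or above the chosen figure; the result is a starting point for the documented rationale rather than a final count.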

Model selection is equally important. Wireless temperature data loggers provide flexibility in dynamic or high-traffic environments, while fixed or chamber-optimized loggers may be appropriate where access is limited and conditions are stable.

Strategic Placement Guidelines

Once quantity is defined, placement should reflect both spatial coverage and thermal behaviour:

- Horizontal spacing is typically tighter in variable zones and wider in thermally stable areas

- Vertical placement should represent product exposure at lower, mid, and upper storage levels

- High-risk interfaces often justify clustered measurements rather than single points

Common placement refinements include:

- Doors: dual loggers positioned at different heights to capture transient ingress effects

- Supply or return vents: sensors offset from direct airflow to measure mixed air conditions

- Racking and shelving: mid-shelf placement aligned with actual product locations, avoiding direct contact

All logger positions should be recorded using coordinates, diagrams, or photographs, supported by written justification. A simple deployment matrix linking each position to its rationale strengthens traceability during audits.
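A deployment matrix can be as simple as one row per position. The sketch below uses Python's standard `csv` and `dataclasses` modules; the field names, the duplicate-ID check, and the example serial numbers are illustrative assumptions rather than a required format.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Iterable


@dataclass(frozen=True)
class LoggerPosition:
    position_id: str
    logger_serial: str
    x_m: float
    y_m: float
    z_m: float
    rationale: str


def deployment_matrix_csv(positions: Iterable[LoggerPosition]) -> str:
    """Render positions as CSV, rejecting duplicate position IDs."""
    rows = list(positions)
    ids = [p.position_id for p in rows]
    if len(ids) != len(set(ids)):
        raise ValueError("each position ID must be unique")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(LoggerPosition)])
    writer.writeheader()
    for position in rows:
        writer.writerow(asdict(position))
    return buffer.getvalue()


if __name__ == "__main__":
    matrix = deployment_matrix_csv([
        LoggerPosition("P01", "SN-0001", 1.0, 3.0, 2.5, "Upper zone, heat accumulation"),
        LoggerPosition("P02", "SN-0002", 0.3, 5.8, 1.5, "Doorway, transient ingress"),
    ])
    print(matrix)
```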

Execution: From Setup to Insights

Prior to deployment, all temperature loggers should be verified against a calibrated reference (master) temperature sensor to confirm accuracy and traceability. Calibration status must be current and documented.
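Pre-deployment verification is often recorded as the mean offset between each logger and the reference sensor at a stable set point. This sketch assumes paired readings taken at the same moments and a tolerance supplied from the study protocol; the 0.5 °C value and serial numbers in the example are placeholders, not a requirement from any standard.

```python
from statistics import mean
from typing import Dict, List, Tuple


def verify_against_reference(logger_readings: Dict[str, List[float]],
                             reference: List[float],
                             tolerance_c: float) -> Dict[str, Tuple[float, bool]]:
    """Return (mean offset in °C, pass/fail) per logger serial."""
    results: Dict[str, Tuple[float, bool]] = {}
    for serial, readings in logger_readings.items():
        if not readings or len(readings) != len(reference):
            raise ValueError(f"{serial}: readings must pair one-to-one with the reference")
        offset = mean([r - ref for r, ref in zip(readings, reference)])
        results[serial] = (offset, abs(offset) <= tolerance_c)
    return results


if __name__ == "__main__":
    reference = [5.0, 5.0, 5.0]  # hypothetical master readings at a 5 °C set point
    loggers = {"SN-0001": [5.25, 5.25, 5.25], "SN-0002": [5.75, 5.75, 5.75]}
    print(verify_against_reference(loggers, reference, tolerance_c=0.5))
```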

Mapping studies are typically executed over 48 to 96 hours and should include:

- An empty or baseline condition

- A fully loaded or representative worst-case condition

- Additional seasonal or operational scenarios where required

Data review focuses on identifying temperature extremes, stability trends, recovery behaviour after disturbances, and overall compliance with acceptance criteria. Modern temperature validation systems support automated analysis, graphical visualization, and compliant reporting, enabling efficient interpretation and inspection-ready documentation.
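The core of that data review can be sketched in a few lines. This example assumes readings grouped by position ID and a single acceptance band (2 to 8 °C is used purely as an illustration); it identifies the hot spot, the cold spot, and any positions that left the band. Validated software would add audit trails and statistics that this hypothetical `summarize_mapping` helper omits.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class MappingSummary:
    hot_spot: str            # position with the highest single reading
    cold_spot: str           # position with the lowest single reading
    overall_max: float
    overall_min: float
    out_of_limit: List[str]  # positions with any reading outside the band


def summarize_mapping(data: Dict[str, List[float]], low: float, high: float) -> MappingSummary:
    """Summarize extremes and limit compliance for readings keyed by position ID."""
    if not data or any(not readings for readings in data.values()):
        raise ValueError("every position needs at least one reading")
    hot = max(data, key=lambda pos: max(data[pos]))
    cold = min(data, key=lambda pos: min(data[pos]))
    out_of_limit = sorted(pos for pos, readings in data.items()
                          if min(readings) < low or max(readings) > high)
    return MappingSummary(hot, cold, max(data[hot]), min(data[cold]), out_of_limit)


if __name__ == "__main__":
    readings = {  # hypothetical loaded-condition data, °C
        "P01": [4.0, 4.5, 5.0],
        "P02": [3.0, 3.5, 2.5],
        "P03": [6.5, 8.5, 7.0],
        "P04": [1.5, 3.0, 3.5],
    }
    print(summarize_mapping(readings, low=2.0, high=8.0))
```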

Enhancing with Kaye Innovations

Kaye Instruments has long been recognized for precision validation equipment engineered specifically for regulated pharmaceutical, biotech, and life science environments. Kaye’s temperature validation systems are designed to support both traditional grid-based mapping and advanced risk-based strategies, enabling organizations to meet WHO, ISPE, and GMP expectations with confidence.

At the core of Kaye’s portfolio are its wireless temperature data loggers, which eliminate cabling constraints and simplify deployment in complex or high-traffic environments. Solutions such as the ValProbe® RT wireless temperature data loggers allow precise placement at true product locations, supporting accurate thermal validation in cold rooms, stability chambers, warehouses, and transport systems.

Key capabilities that strengthen temperature mapping and validation studies include:

- High-accuracy temperature dataloggers with calibrated, traceable sensors suitable for critical temperature validation applications

- Wireless and real-time data logger functionality, enabling uninterrupted data capture and immediate visibility during mapping studies

- Integrated validation software for secure data collection, automated analysis, and compliant reporting

- Scalable validation equipment supporting small chambers, large controlled rooms, and multi-zone warehouse environments

These solutions integrate seamlessly into a complete temperature validation system, allowing users to move efficiently from study execution to inspection-ready documentation. Advanced reporting features support trend analysis, hotspot identification, and clear justification of data logger placement decisions.

By combining flexible wireless data loggers, robust software, and proven validation expertise, Kaye Instruments enables organizations to design defensible temperature mapping studies that align with regulatory expectations while reducing study time, operational disruption, and overall validation effort.

Key Takeaways

- The number and placement of temperature dataloggers directly determine the credibility and regulatory acceptance of a temperature mapping study.

- There is no universal calculation model; defensible studies combine grid-based layouts with risk-based placement strategies.

- Traditional 9-point and 27-point grid methods provide reliable baseline coverage for chambers and controlled environments.

- Risk-based approaches focus logger density on high-risk zones such as doors, HVAC interfaces, perimeter walls, and high-traffic areas.

- Vertical placement is as critical as horizontal coverage, particularly in spaces with shelving, palletized loads, or airflow stratification.

- WHO, ISPE, and EN 60068 guidance prioritize scientific justification and documentation over fixed sensor counts.

- Wireless temperature data loggers enable flexible placement, easier repositioning, and real-time visibility during mapping studies.

- Calibration traceability and documented placement rationale are essential for audit readiness and long-term validation confidence.

- Integrated temperature validation systems improve efficiency, data integrity, and inspection outcomes.

Conclusion: Validate with Confidence

An effective temperature mapping study reduces uncertainty in environments where even minor deviations can carry regulatory and product quality consequences. Determining the right number of temperature dataloggers and placing them with intent elevates mapping from a procedural task to a meaningful validation exercise. Grid-based methods such as 9-point and 27-point layouts provide structured baseline coverage, while risk-based strategies focus attention on areas most likely to challenge thermal control. Standards such as EN 60068 reinforce this approach by emphasizing representativeness, repeatability, and worst-case evaluation rather than rigid formulas.

Across chambers, cold rooms, warehouses, and transport systems, the unifying requirement is clear justification. Regulators expect placement decisions to be supported by calibrated measurement systems, documented rationale, and sound engineering judgment. By combining proven validation principles with reliable temperature validation systems, organizations can generate mapping data that supports compliance, protects product integrity, and builds long-term confidence in environmental control. Kaye Instruments supports this process with high-accuracy temperature dataloggers, flexible wireless solutions, and compliant software designed for regulated industries.

Copyright: Amphenol Corporation